A discussion of statistical significance is probably a bit above middle school level, but I’m posting a note here as a reminder of how important statistics are. Students will hear about confidence intervals when they hear about the margin of error of polls in the news and the “significant” benefits of new drugs. Indeed, if you think about it, the development of formal thinking skills during adolescence should make it easier for students to see the world from a more probabilistic perspective, noticing the shades of grey that surround issues, rather than the more black-and-white, deterministic point of view young idealists tend to have. At any rate, statistics are important in life but, according to a Science Magazine article, many scientists are not using them correctly.

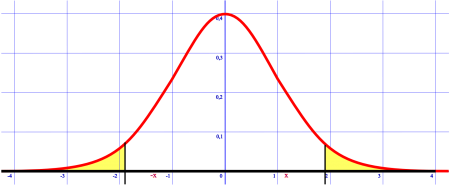

One key error is in understanding the term “statistically significant”. When Ronald A. Fisher came up with the concept, he somewhat arbitrarily chose 95% (a significance level of 5%) as the cutoff for deciding whether an experimental result was unlikely to be due to chance alone. The arbitrariness is one part of the problem. Even at that cutoff, an experiment with no real effect at all still has one chance in twenty of producing a “significant” result, and with all the scientists conducting experiments, that’s a lot of unrecognized false positives.
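
To make the one-in-twenty idea concrete, here is a rough simulation sketch. It is my own illustration, not something from the article. It runs a lot of pretend experiments in which both groups are drawn from the same distribution, so there is nothing real to find, and counts how often a simple two-sided z-test still comes out "significant" at the 5% level. The function name, the group size of 30, the 2,000 experiments and the assumption of a known standard deviation of 1 are all choices I made for the example.

```python
import random
from statistics import NormalDist, mean


def false_positive_rate(n_experiments=2000, n_per_group=30, alpha=0.05, seed=1):
    """Fraction of no-effect experiments that still come out 'significant'."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    # Standard error of the difference between two group means,
    # assuming each measurement has a known standard deviation of 1.
    std_err = (2 / n_per_group) ** 0.5
    hits = 0
    for _ in range(n_experiments):
        # Both groups come from the same distribution: there is no real effect.
        group_a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        group_b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        z = (mean(group_a) - mean(group_b)) / std_err
        # Two-sided p-value from the standard normal distribution.
        p_value = 2 * (1 - std_normal.cdf(abs(z)))
        if p_value < alpha:
            hits += 1
    return hits / n_experiments


rate = false_positive_rate()
print(f"'Significant' results with no real effect: {rate:.1%}")
```

The fraction should land somewhere near 5%, which is just the cutoff showing through: set the bar at one in twenty and roughly one in twenty no-effect experiments will clear it.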

But the big problem is that people conflate statistical significance and actual significance. Just because there is a statistically significant correlation between eating apples and acne does not mean that it’s actually important. It could be that this result predicts that one person in ten million will get acne from eating apples, but is that enough reason to stop eating apples?
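
Here is a small sketch of how a tiny effect can still be "statistically significant" when the sample is big enough. All the numbers are made up for illustration (they are not from the article, and the effect is far larger than one in ten million so the arithmetic stays readable): five million apple eaters with a 10.1% acne rate versus five million non-eaters with a 10.0% rate. The helper `two_proportion_z_test` is my own name for a standard pooled two-proportion z-test.

```python
from statistics import NormalDist


def two_proportion_z_test(cases_1, n_1, cases_2, n_2):
    """Two-sided z-test for a difference between two proportions (pooled)."""
    p_1 = cases_1 / n_1
    p_2 = cases_2 / n_2
    # Pooled proportion under the assumption that both groups share one rate.
    pooled = (cases_1 + cases_2) / (n_1 + n_2)
    std_err = (pooled * (1 - pooled) * (1 / n_1 + 1 / n_2)) ** 0.5
    z = (p_1 - p_2) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts, invented for this example.
apple_cases, apple_total = 505_000, 5_000_000        # 10.1% with acne
no_apple_cases, no_apple_total = 500_000, 5_000_000  # 10.0% with acne

z, p_value = two_proportion_z_test(
    apple_cases, apple_total, no_apple_cases, no_apple_total
)
risk_difference = apple_cases / apple_total - no_apple_cases / no_apple_total

print(f"z = {z:.2f}, p-value = {p_value:.2g}")
print(f"Extra acne cases per 1,000 apple eaters: {risk_difference * 1000:.1f}")
```

With these invented numbers the p-value comes out far below 0.05, yet the difference amounts to about one extra case per thousand people. The test only says the difference is unlikely to be chance; whether it matters is a separate question.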

It is a fascinating article that deals with a number of other erroneous uses of statistics, but I’ve just spent more time on this post than I’d planned (it was supposed to be a short note). So I’d be willing to bet that there is a statistically significant correlation between my interest in an issue and the length of the post (and no correlation with the amount of time I intended to spend on the post).